For decades, the foundation of our digital world has been the ubiquitous silicon chip. From the powerful processors in our smartphones to the vast data centers powering the internet, silicon-based semiconductors have driven the relentless march of technological progress, largely adhering to Moore’s Law. However, as we approach the physical limits of miniaturization and the fundamental laws of physics begin to present formidable challenges, the search for the next frontier in computing has become an urgent quest. The future of computing promises to transcend the limitations of traditional silicon, venturing into realms that harness quantum mechanics, biological processes, and entirely new material sciences. This exploration beyond silicon chips is not merely an incremental improvement; it’s a revolutionary leap towards unlocking unprecedented computational power, efficiency, and capabilities that will redefine every aspect of our lives.

The End of an Era? Understanding Silicon’s Limits

To truly appreciate the urgency and innovation driving the search for post-silicon computing, it’s crucial to understand why our reliance on traditional semiconductors is facing an inevitable plateau.

A. The Reign of Silicon and Moore’s Law

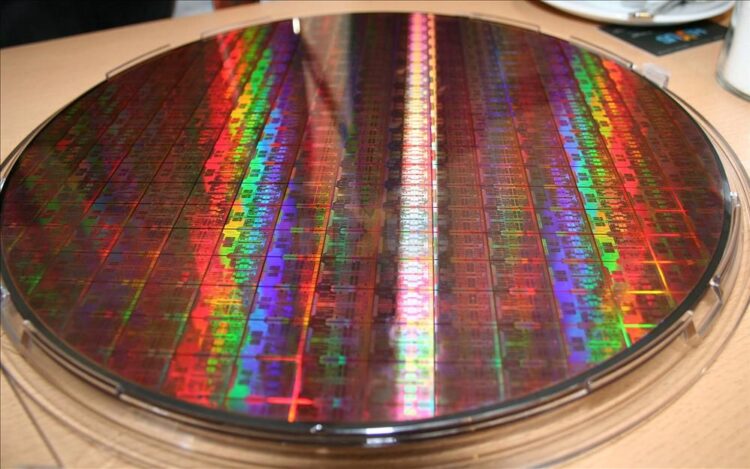

Since the mid-20th century, silicon has been the undisputed king of computing. Its semiconducting properties, abundance, and the ability to fabricate incredibly intricate circuits have fueled exponential growth.

- Moore’s Law: First stated by Gordon Moore in 1965, this observation originally projected that the number of components on a chip would double every year; in 1975 Moore revised the pace to approximately every two years. This scaling has driven unprecedented increases in computational power and corresponding decreases in cost and size.

- Transistor Miniaturization: Engineers have pushed the boundaries of physics, shrinking the critical features of transistors to a few nanometers, only tens of atoms across. This miniaturization has been the primary engine of Moore’s Law.

- Ubiquitous Impact: Silicon chips are the invisible backbone of modern society, enabling everything from personal computers and mobile devices to advanced medical equipment, artificial intelligence, and global communication networks.
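
The doubling rule above is simple compound growth. The sketch below assumes an idealized, perfectly regular doubling period, which real transistor counts only roughly follow; the function name `projected_transistors` and its parameters are illustrative and not taken from any source.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: the count doubles once per period."""
    doublings = years / doubling_period_years
    return initial_count * 2 ** doublings


if __name__ == "__main__":
    # Twenty years at one doubling every two years is ten doublings,
    # i.e. a growth factor of 2**10 = 1024.
    print(projected_transistors(1_000, 20))
```

Even under these simplified assumptions, the arithmetic shows why two-year doublings compound into roughly a thousandfold increase every two decades.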

B. Confronting the Physical and Economic Barriers

Despite its remarkable run, silicon’s dominance is facing fundamental constraints that signal a looming slowdown, if not an outright end, to Moore’s Law’s historical pace.

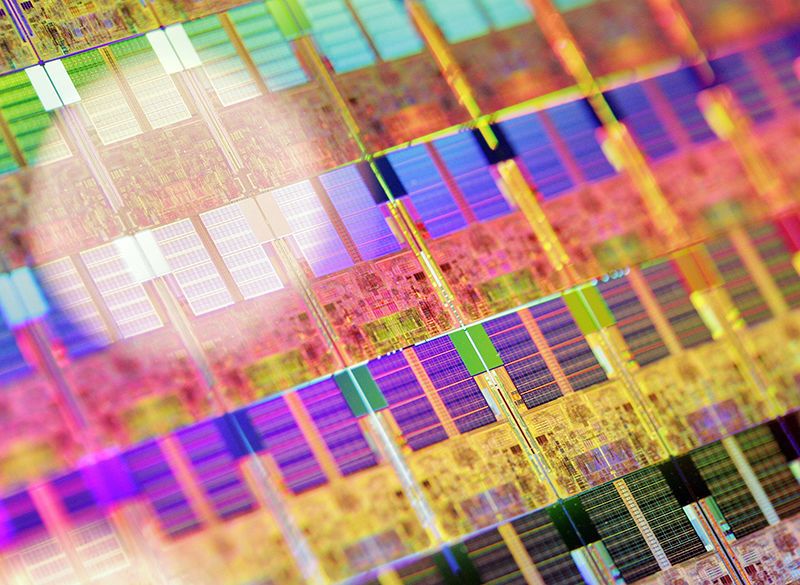

- Atomic Limits: Leading-edge processes are now marketed as 2-3 nanometer nodes. These names no longer correspond to a single physical dimension, but the smallest features in a transistor are only tens of atoms across. At this scale, quantum effects (like quantum tunneling, where electrons pass through barriers that should classically block them) interfere with reliable operation, leading to increased leakage and unpredictable behavior. There is little room left to shrink further.

- Heat Dissipation: As more transistors are crammed into a smaller space, the heat generated per unit area rises sharply. Dissipating this heat efficiently becomes a monumental engineering challenge, requiring sophisticated cooling systems that consume significant energy and add complexity.

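- Power Consumption: Since the mid-2000s, shrinking a transistor has no longer reduced its power draw in proportion to its area (the breakdown of Dennard scaling), so denser chips draw more power per unit area. The energy required to power and cool massive data centers is becoming difficult to sustain, both economically and environmentally.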

- Manufacturing Costs: Fabricating chips at such tiny scales requires incredibly precise and expensive machinery (e.g., Extreme Ultraviolet Lithography). The cost of building new fabrication plants (fabs) has skyrocketed, making it increasingly difficult for companies to justify the investment for diminishing returns in performance gains.

- Interconnect Bottlenecks: As transistors shrink, the wires connecting them (interconnects) also become smaller. This leads to increased resistance and capacitance, which slows down the signal propagation, becoming a major bottleneck that limits overall chip performance, even if individual transistors are faster.

These interwoven challenges indicate that while silicon will remain foundational for many years, the rate of traditional performance improvements is slowing, forcing researchers to look for fundamentally new ways to compute.
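
To give a feel for why leakage from quantum tunneling grows so quickly as barriers thin, here is a rough sketch using the textbook thick-barrier approximation T ≈ exp(-2κd) for a rectangular barrier. The 1 eV barrier height and the use of the free-electron mass are illustrative assumptions, not values for any real transistor, and prefactors are ignored.

```python
import math

HBAR = 1.054_571_817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.109_383_7015e-31  # free-electron mass, kg
EV_TO_JOULE = 1.602_176_634e-19     # joules per electronvolt


def tunneling_probability(barrier_nm: float, barrier_height_ev: float = 1.0) -> float:
    """Rough transmission probability through a rectangular barrier.

    Uses T ~ exp(-2 * kappa * d) with kappa = sqrt(2 * m * phi) / hbar,
    where phi is the barrier height above the electron's energy.
    The default barrier height is an assumed, illustrative value.
    """
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_height_ev * EV_TO_JOULE) / HBAR
    return math.exp(-2 * kappa * barrier_nm * 1e-9)


if __name__ == "__main__":
    for thickness_nm in (3.0, 2.0, 1.0):
        probability = tunneling_probability(thickness_nm)
        print(f"{thickness_nm} nm barrier: T ~ {probability:.1e}")
```

Because the dependence is exponential, each nanometer removed from the barrier multiplies the leakage probability by the same large factor, which is why the last few nanometers of scaling are so much harder than the earlier ones.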

The Dawn of New Computing Paradigms: Beyond Traditional Transistors

The quest for future computing is leading researchers down diverse and exciting paths, exploring entirely new physical principles and material sciences to create the next generation of processors.

A. Quantum Computing: Harnessing Subatomic Realities

Quantum computing represents the most radical departure from classical silicon-based computation. Instead of relying on bits that are either 0 or 1, quantum computers use qubits, which can exist in a superposition of 0 and 1 and can be entangled with other qubits.

- Qubits and Superposition: The fundamental unit of quantum information, a qubit, leverages superposition: its state is a weighted combination of 0 and 1 until it is measured. Describing n qubits takes 2^n complex amplitudes, but a measurement returns only n classical bits, so quantum algorithms must be designed so that interference concentrates probability on useful answers.

- Entanglement: Qubits can become ‘entangled,’ meaning their measurement outcomes are correlated in ways no classical system can reproduce, regardless of distance. Entanglement does not transmit information instantly, but it is an essential resource that quantum algorithms combine with superposition and interference to outperform classical methods on certain problems.

- Solving Intractable Problems: Quantum computers are not meant to replace classical computers for everyday tasks. Instead, they are designed to solve specific, highly complex problems that are intractable for even the most powerful supercomputers, such as:

- Drug Discovery and Material Science: Simulating molecular structures and chemical reactions at the quantum level to design new drugs, catalysts, and materials.

- Optimization Problems: Tackling complex logistics, financial modeling, and supply chain optimization challenges, although a practical quantum advantage for these problems has not yet been demonstrated.

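- Cryptography: Breaking current public-key encryption methods (e.g., RSA, via Shor’s algorithm on a sufficiently large, error-corrected machine), which is motivating the development of new, quantum-safe encryption.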

- Challenges: Building stable qubits is extremely difficult, requiring cryogenic temperatures or ultra-high vacuums. Error rates are high, and scaling to a large number of stable qubits is a major engineering hurdle. The field is still in its early stages but holds immense promise.
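
Superposition and entanglement can be made concrete with a tiny classical simulation of two qubits. This is a minimal sketch in plain Python, not the API of any quantum SDK: the helper names (`apply_hadamard`, `apply_cnot`, `bell_state`) are illustrative, and the state is stored as a list of four complex amplitudes indexed by the bit pattern of the two qubits.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a statevector."""
    h = 1 / math.sqrt(2)
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        bit = (index >> target) & 1
        index_with_0 = index & ~(1 << target)
        index_with_1 = index | (1 << target)
        # |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)
        new_state[index_with_0] += h * amplitude
        new_state[index_with_1] += h * amplitude * (-1 if bit else 1)
    return new_state


def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where `control` is 1."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        if (index >> control) & 1:
            new_state[index ^ (1 << target)] += amplitude
        else:
            new_state[index] += amplitude
    return new_state


def bell_state():
    """Prepare (|00> + |11>)/sqrt(2) starting from |00>."""
    state = [1 + 0j, 0j, 0j, 0j]
    state = apply_hadamard(state, target=0)         # superposition on qubit 0
    return apply_cnot(state, control=0, target=1)   # entangle with qubit 1


def probabilities(state):
    """Measurement probabilities for each basis state."""
    return [abs(amplitude) ** 2 for amplitude in state]


if __name__ == "__main__":
    for index, probability in enumerate(probabilities(bell_state())):
        print(f"|{index:02b}>: {probability:.2f}")
```

In the resulting state only the outcomes 00 and 11 have nonzero probability, so measuring one qubit fixes what the other will show. Note that the simulation stores 2^n amplitudes for n qubits, which is exactly why classical simulation becomes infeasible as the qubit count grows.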

B. Neuromorphic Computing: Mimicking the Brain

Neuromorphic computing takes inspiration directly from the human brain’s structure and function, aiming to build chips that process information more like biological neural networks rather than traditional von Neumann architectures (which separate processing and memory).

- In-Memory Computing: Unlike classical computers that constantly move data between the CPU and memory (the ‘von Neumann bottleneck’), neuromorphic chips integrate processing and memory elements, significantly reducing energy consumption and increasing speed for AI workloads.

- Spiking Neural Networks: These chips often implement spiking neural networks, where neurons ‘fire’ (produce a ‘spike’) only when certain conditions are met, mimicking biological neurons. This event-driven approach is incredibly energy-efficient for sparse, real-time data processing.

- Pattern Recognition and AI: Neuromorphic chips are exceptionally well-suited for artificial intelligence tasks, particularly real-time pattern recognition, sensory data processing, and machine learning applications that require massive parallelism and energy efficiency (e.g., edge AI, robotics, autonomous vehicles).

- Emerging Technologies: Research involves new materials like memristors (resistors with memory) that can store and process information simultaneously, and optical computing approaches that use light instead of electrons for faster, more energy-efficient communication.

- Challenges: Programming neuromorphic chips requires new algorithms and programming models, and the architectures are still nascent. Integrating them into existing software stacks is also a significant challenge.
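
The event-driven behavior of a spiking neuron described above can be sketched with a leaky integrate-and-fire model, one common simplification used in this field. The parameter values and the function name `simulate_lif` are illustrative assumptions, not taken from any particular neuromorphic chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron in discrete time steps.

    Each step the membrane potential decays by the `leak` factor, then
    integrates the incoming current. When it reaches `threshold`, the
    neuron emits a spike (1) and the potential returns to `reset`.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # Sparse input: the neuron only produces output when enough input
    # accumulates, and stays silent otherwise.
    print(simulate_lif([0.6, 0.6, 0.0, 0.0, 0.3, 0.9]))
```

With no input the neuron produces no spikes at all, which is the property that makes event-driven hardware attractive for sparse sensor data: silent neurons cost almost nothing.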

C. Optical Computing: The Speed of Light

Optical computing explores the use of photons (light particles) instead of electrons to perform computations. Optical signals lose little energy as heat in transit, offer very high bandwidth, and allow parallel processing by carrying separate data streams on different wavelengths.

- Photonic Circuits: Instead of traditional electronic circuits, optical computers use photonic circuits where data is transmitted and processed by light.

- Reduced Heat and Power: Photons generate virtually no heat during transmission, which can reduce power consumption and cooling requirements compared to electronic interconnects, although the lasers, modulators, and detectors around them still consume power.

- Higher Bandwidth and Speed: Light signals can carry significantly more data than electrical signals and degrade less over distance, potentially leading to much higher throughput and lower latency, particularly for inter-chip communication.

- Applications: Initially, optical computing might find its niche in specialized areas like high-speed data centers, telecommunications, and AI accelerators where moving large amounts of data quickly is paramount.

- Challenges: Miniaturizing optical components, integrating them with existing electronic systems, and manufacturing them reliably at scale are major engineering hurdles. Converting electrical signals to optical and back also introduces overhead.

D. DNA Computing: Harnessing Biological Processes

DNA computing uses DNA molecules to perform computations. The vast number of molecules in a small test tube allows for massive parallelism.

- Molecular Parallelism: DNA strands can be designed to encode information and perform operations (like additions or subtractions) through chemical reactions. The sheer number of molecules in a solution means billions of computations can occur simultaneously.

- Energy Efficiency: DNA computing operates at extremely low energy levels compared to electronic computers.

- Applications: Potentially useful for solving complex combinatorial problems (Leonard Adleman’s 1994 demonstration solved a small instance of the Hamiltonian path problem, a close relative of the traveling salesman problem), and for highly specialized biological or medical applications within a cellular environment.

- Challenges: DNA computing is extremely slow compared to electronic computers (results can take days or weeks), difficult to control precisely, and currently limited to very specific types of problems. It’s more of a research curiosity than a mainstream computing paradigm for now.
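
DNA computing typically attacks combinatorial problems with a generate-and-filter strategy: chemistry produces an enormous pool of candidate answers at once, and later steps discard the invalid ones. The sketch below imitates that idea in software for the Hamiltonian path problem (visit every vertex of a directed graph exactly once), a close relative of the traveling salesman problem. It is an analogy only: the loop here checks candidates one at a time, whereas in a test tube the candidates form in parallel. The function name and the example graph are illustrative.

```python
from itertools import permutations


def hamiltonian_paths(n_vertices, edges, start, end):
    """Return every path from `start` to `end` that visits each vertex once.

    `edges` is an iterable of directed (from_vertex, to_vertex) pairs
    over vertices numbered 0 .. n_vertices - 1.
    """
    edge_set = set(edges)
    inner = [v for v in range(n_vertices) if v not in (start, end)]
    found = []
    # "Generate": every ordering of the intermediate vertices is a candidate.
    for middle in permutations(inner):
        path = (start, *middle, end)
        # "Filter": keep a candidate only if each consecutive step is an edge.
        if all((a, b) in edge_set for a, b in zip(path, path[1:])):
            found.append(path)
    return found


if __name__ == "__main__":
    example_edges = [(0, 1), (1, 2), (2, 3), (0, 2)]
    print(hamiltonian_paths(4, example_edges, start=0, end=3))
```

The number of candidates grows factorially with the number of vertices, which is why the molecular parallelism is appealing and also why the approach still runs out of material on large instances.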

E. Spintronics: Beyond Charge, Using Spin

Spintronics (spin transport electronics) proposes using the intrinsic angular momentum of electrons (their ‘spin’) in addition to their electrical charge to store and process information.

- Non-Volatile Memory: Spintronic devices like MRAM (Magnetoresistive RAM) can retain data even when power is off, offering fast, non-volatile memory that could eventually replace some RAM and flash storage and greatly shorten cold boot times.

- Lower Power Consumption: Switching the spin state requires less energy than moving charge, leading to more energy-efficient devices.

- Higher Speed: Spin manipulation can be faster than charge manipulation.

- Applications: Potential for next-generation memory, highly energy-efficient processors, and specialized logic devices.

- Challenges: Fabricating reliable spintronic devices at scale and integrating them with existing silicon technology is a complex engineering task. Maintaining spin coherence is also difficult.

Key Enablers and Drivers for Future Computing Paradigms

The transition beyond silicon chips won’t happen in isolation. Several technological advancements and societal demands are acting as key enablers and powerful drivers for these new computing frontiers.

A. Advanced Materials Science

The ability to engineer new materials with tailored properties is fundamental to many of these emerging computing paradigms.

- 2D Materials: Graphene, molybdenum disulfide (MoS₂), and other 2D materials offer extraordinary electronic, optical, and thermal properties that could surpass silicon for certain applications, enabling thinner and more flexible devices.

- Topological Insulators: Materials that conduct electricity on their surface but act as insulators in their bulk. Their surface states resist scattering, promising highly efficient electron transport with very little energy loss.

- Memristors: Resistors with memory, whose resistance changes based on the history of current flow. These are crucial for neuromorphic computing due to their ability to combine memory and processing.

- Superconductors: Materials that conduct electricity with zero resistance at very low temperatures. While challenging to use, they are critical for some qubit technologies in quantum computing.
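
The memristor’s defining property, a resistance that depends on the history of current flow, can be illustrated with a toy model. This is a deliberately simplified sketch: the linear state update, the resistance bounds, and the `drift_rate` constant are all assumed, illustrative values, not measurements from a real device.

```python
def simulate_memristor(currents, dt=1e-3, r_on=100.0, r_off=16_000.0,
                       drift_rate=50.0, initial_state=0.1):
    """Return the device resistance (ohms) after each current sample.

    `state` is a dimensionless value in [0, 1]: 0 means fully
    high-resistance (r_off) and 1 means fully low-resistance (r_on).
    Current in the positive direction moves the state toward 1.
    """
    state = initial_state
    resistances = []
    for current in currents:
        state += drift_rate * current * dt      # history-dependent update
        state = min(1.0, max(0.0, state))       # keep the state physical
        resistances.append(r_on * state + r_off * (1.0 - state))
    return resistances


if __name__ == "__main__":
    # Five steps of positive current, then five steps with no current.
    trace = simulate_memristor([1.0] * 5 + [0.0] * 5)
    for step, resistance in enumerate(trace):
        print(f"step {step}: {resistance:.0f} ohms")
```

While current flows the resistance falls, and once the current stops the resistance stays where it was. That retained value is the "memory", and because the same element both stores the value and shapes the current passing through it, it can act as a synapse-like weight in neuromorphic designs.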

B. Artificial Intelligence and Machine Learning

The insatiable demand for processing complex AI workloads is a primary driver for new computing architectures.

- AI Accelerators: Chips specialized or adapted for AI tasks (e.g., TPUs and GPUs) are already pushing the limits of silicon. Future computing paradigms like neuromorphic and optical computing promise even more efficient and powerful AI processing at massive scales.

- Machine Learning for Design: AI itself is being used to design new chips and materials, accelerating the discovery and optimization of novel computing components.

- Edge AI: The need to perform AI computations directly on devices (e.g., autonomous vehicles, IoT sensors) without relying on cloud connectivity drives the demand for extremely energy-efficient and compact AI-optimized processors, a natural fit for neuromorphic approaches.

C. Big Data and Data-Centric Computing

The explosion of data from diverse sources (IoT, sensors, social media, scientific instruments) necessitates new computing approaches.

- Data Movement Bottlenecks: Traditional architectures struggle with the sheer volume of data movement between memory and processing units. New architectures that integrate memory and processing (like neuromorphic) or process data in entirely new ways (like quantum) aim to overcome this.

- In-Memory Processing: Technologies that enable computations directly within or very close to memory reduce the need for constant data transfer, drastically improving efficiency for data-intensive tasks.

- Specialized Processors: As data becomes more diverse, specialized processors optimized for specific data types or processing patterns (e.g., graph processing units, vector processors) will become more common, often leveraging post-silicon technologies.

D. Advanced Manufacturing Techniques

The ability to precisely fabricate these new, complex materials and architectures is crucial.

- Atomic Layer Deposition (ALD): Extremely precise thin-film deposition techniques that allow for building structures one atomic layer at a time.

- 3D Printing (Additive Manufacturing): For certain components or architectures, advanced 3D printing techniques could enable novel chip designs that are impossible with traditional lithography.

- Self-Assembly: Research into self-assembling molecular structures could potentially lead to highly complex and dense computing components.

E. Interdisciplinary Research and Collaboration

The challenges of future computing are too vast for any single discipline. Progress relies heavily on intense collaboration between:

- Physics and Material Science: To discover and engineer new materials with desired properties.

- Computer Science and Engineering: To design architectures, develop programming models, and build prototypes.

- Mathematics: To develop new algorithms that can fully leverage the unique capabilities of quantum or neuromorphic computers.

- Industry and Academia: Partnerships are vital for translating fundamental research into practical, scalable technologies.

Challenges on the Path Beyond Silicon

While the promise of future computing is immense, the road ahead is fraught with significant technical, economic, and societal challenges that must be overcome.

A. Fundamental Engineering Hurdles

Building these new computers from the ground up presents enormous engineering difficulties.

- Scalability: Many promising technologies (especially quantum computing) face immense challenges in scaling from a few experimental units to a stable, error-corrected system with a large number of processing elements.

- Stability and Coherence: Maintaining the delicate quantum states of qubits (coherence) or the precise spin states in spintronics is incredibly difficult, as they are highly susceptible to environmental noise and interference.

- Error Correction: The inherent fragility of these new computing methods leads to high error rates. Developing robust error correction mechanisms is crucial but adds significant overhead and complexity.

- Manufacturing at Scale: Developing reliable, cost-effective, and scalable manufacturing processes for entirely new materials and architectures (e.g., self-assembling DNA structures, atomically precise spintronic devices) is a monumental task that will require decades of R&D.
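
The trade-off behind error correction, spending extra hardware to buy reliability, can be seen in the simplest classical scheme: a three-bit repetition code with majority voting. This is an analogy only. Real quantum error correction cannot copy an unknown qubit and relies on more elaborate codes, so treat the sketch as an illustration of the overhead argument, not of a quantum procedure.

```python
import random


def encode(bit):
    """Protect one logical bit by storing three physical copies."""
    return [bit, bit, bit]


def noisy_channel(bits, flip_probability, rng):
    """Flip each physical bit independently with the given probability."""
    return [bit ^ int(rng.random() < flip_probability) for bit in bits]


def decode(bits):
    """Recover the logical bit by majority vote."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p):
    """Probability that two or three of the three copies flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3


if __name__ == "__main__":
    rng = random.Random(0)
    received = noisy_channel(encode(1), flip_probability=0.1, rng=rng)
    print("received:", received, "decoded:", decode(received))
    print("physical error 1% -> logical error", logical_error_rate(0.01))
```

Tripling the hardware turns a per-bit error rate p into roughly 3p² for small p, which helps only when p is already small. The same logic explains why fragile qubits need many physical units per reliable logical unit.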

B. Software and Algorithm Development

The new hardware architectures demand entirely new ways of thinking about software and algorithms.

- New Programming Paradigms: Classical programming languages and compilers are ill-suited for quantum or neuromorphic computers. Developers need new programming languages, frameworks, and tools to interact with these novel architectures.

- Algorithm Discovery: For many problems, we don’t yet have algorithms that can fully leverage the unique strengths of quantum or neuromorphic computing. Discovering and optimizing these ‘quantum algorithms’ or ‘neuromorphic algorithms’ is a significant research area.

- Developer Adoption: Even with new tools, convincing developers to learn fundamentally different ways of programming and thinking about computation will be a gradual process.

C. Economic and Infrastructural Investment

The transition to post-silicon computing will require colossal financial and infrastructural investments.

- Research and Development Costs: Developing entirely new computing technologies from basic science to commercialization is incredibly expensive and carries high risks.

- Infrastructure Rebuild: Building the necessary fabrication plants, research facilities, and specialized cooling/power infrastructure for these new systems will require unprecedented levels of investment from governments and private industry.

- Cost of Ownership: Early quantum computers or specialized neuromorphic systems will be extremely expensive to acquire, operate, and maintain, limiting their initial accessibility to large research institutions and corporations.

D. Integration with Existing Systems

The world runs on silicon. Integrating new computing paradigms seamlessly into existing IT infrastructure, software stacks, and cloud ecosystems will be a complex and gradual process. It’s more likely to be a hybrid future, where specialized post-silicon accelerators augment classical systems, rather than a complete replacement.

E. Ethical, Social, and Security Implications

As with any transformative technology, future computing raises profound ethical, social, and security questions.

- AI Power and Control: Neuromorphic computing could lead to incredibly powerful and efficient AI. This raises questions about control, bias, and the potential for autonomous systems.

- Privacy and Surveillance: Enhanced computational power could enable unprecedented surveillance capabilities or the processing of vast amounts of sensitive personal data, raising privacy concerns.

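- Quantum-Safe Cryptography: While quantum computing threatens current public-key encryption, defenses exist, including post-quantum algorithms that run on classical hardware and quantum key distribution. Migrating to them (the ‘quantum safe’ transition) is a major security challenge for governments and businesses worldwide.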

- Job Market Impact: New computing paradigms will create new jobs but could also displace existing ones, necessitating workforce retraining and adaptation strategies.

- Global Competition: The race for quantum supremacy and other future computing advantages is a geopolitical issue, with nations investing heavily to gain a strategic edge.
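
To make the encryption threat above more concrete: the security of RSA rests on the difficulty of factoring a large number N, and Shor’s algorithm factors N by finding the period of a^x mod N. The quantum computer’s only job is to find that period quickly; the rest is ordinary arithmetic. The sketch below finds the period by brute force, which is exactly the step that is infeasible classically for large N, and then performs the classical post-processing. Function names are illustrative.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1. Requires gcd(a, n) == 1.

    Brute force stands in for the quantum period-finding step.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factors_from_period(a, n):
    """Try to split n using the period of a modulo n.

    Returns a pair of factors, or None if this choice of `a` is unlucky
    (odd period, or a**(r/2) congruent to -1 mod n).
    """
    if gcd(a, n) != 1:
        raise ValueError("a must share no factor with n")
    r = find_period(a, n)
    if r % 2 == 1:
        return None
    half_power = pow(a, r // 2, n)
    if half_power == n - 1:
        return None
    return gcd(half_power - 1, n), gcd(half_power + 1, n)


if __name__ == "__main__":
    print(factors_from_period(7, 15))
```

For N = 15 and a = 7 the period is 4, and the two greatest common divisors recover the factors 3 and 5. Real keys use numbers hundreds of digits long, far beyond brute-force period finding and, for now, far beyond existing quantum hardware as well.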

The Road Ahead: How Future Computing Will Unfold

The transition beyond silicon will not be a sudden revolution but a gradual evolution. The future computing landscape will likely be heterogeneous, with different technologies excelling at different tasks.

A. Hybrid Computing Architectures

The most probable scenario is a hybrid computing future. Traditional silicon chips will continue to handle most general-purpose computing tasks for a long time due to their versatility and low cost. However, they will be augmented by specialized accelerators built on new paradigms.

- Classical + Quantum: Quantum computers will act as co-processors, solving specific, intractable problems that classical computers cannot handle, with results then fed back to the classical system.

- Classical + Neuromorphic: Neuromorphic chips will excel at AI workloads, pattern recognition, and edge computing, offloading these tasks from traditional CPUs and GPUs, particularly for energy-constrained devices.

- Classical + Optical: Optical interconnects will be crucial for high-speed data transfer between chips and within data centers, potentially leading to hybrid optoelectronic chips.

This means architects and engineers will need to design systems that seamlessly integrate these diverse computing elements.
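
One way to picture such integration is a dispatcher that sends each workload to a specialized accelerator when one is registered and falls back to the classical processor otherwise. This is a hypothetical sketch of the idea only: the class `HybridDispatcher`, the workload labels, and the stand-in backends are invented for illustration and do not correspond to any real scheduler or vendor API.

```python
from typing import Any, Callable, Dict

Backend = Callable[[Any], Any]


class HybridDispatcher:
    """Route workloads to registered accelerators, else to a classical backend."""

    def __init__(self, classical_backend: Backend) -> None:
        self._classical = classical_backend
        self._accelerators: Dict[str, Backend] = {}

    def register(self, workload_kind: str, backend: Backend) -> None:
        """Declare that `backend` should handle workloads of this kind."""
        self._accelerators[workload_kind] = backend

    def run(self, workload_kind: str, payload: Any) -> Any:
        """Run the payload on the matching accelerator or fall back to classical."""
        backend = self._accelerators.get(workload_kind, self._classical)
        return backend(payload)


if __name__ == "__main__":
    # Stand-in backends that just label where the work would have run.
    dispatcher = HybridDispatcher(lambda job: ("cpu", job))
    dispatcher.register("spiking_inference", lambda job: ("neuromorphic", job))
    dispatcher.register("molecular_simulation", lambda job: ("quantum", job))

    print(dispatcher.run("spiking_inference", "camera frame"))
    print(dispatcher.run("spreadsheet", "quarterly report"))
```

The fallback is the important design choice: general-purpose silicon remains the default, and each new paradigm only has to prove itself on the narrow class of work it is registered for.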

B. Specialization and Domain-Specific Processors

As traditional scaling slows, the industry will pivot further towards specialized and domain-specific processors. Instead of general-purpose improvements, future chips will be highly optimized for specific workloads:

- AI/ML Accelerators: Continual innovation in chips designed purely for neural network inference and training.

- Data Processing Units (DPUs): Dedicated processors for handling networking, storage, and security tasks, freeing up CPUs.

- Biotech/Drug Discovery Accelerators: Chips specifically designed for molecular simulations.

- Cryptocurrency Mining Chips: Further evolution of ASICs (Application-Specific Integrated Circuits) for specialized computations.

These specialized chips may incorporate elements of post-silicon technologies where they offer a decisive advantage.

C. Advances in Material Science and Fabrication

Continuous breakthroughs in materials science and advanced fabrication techniques will be critical.

- New Transistor Materials: Beyond silicon, research into materials like Gallium Nitride (GaN) and Silicon Carbide (SiC) for power electronics, and 2D materials for ultra-small transistors, will continue to yield incremental improvements.

- 3D Stacking and Advanced Packaging: Stacking multiple layers of chips vertically (3D integration) and advanced packaging techniques will allow for higher transistor densities and shorter interconnects, pushing performance without shrinking individual transistors further.

- Self-Assembling Nanostructures: Long-term research into molecular self-assembly could enable entirely new ways to build complex computing structures from the bottom up.

D. Software Innovations for New Hardware

The development of new software paradigms, compilers, and programming tools will be crucial for making these nascent technologies accessible and usable for a broader range of developers. This includes:

- Quantum Software Development Kits (SDKs): Making it easier to write and test quantum algorithms.

- Neuromorphic Programming Frameworks: Abstractions that allow developers to leverage brain-inspired chips without needing deep neuroscience expertise.

- Compilers and Optimizers: Intelligent compilers that can map classical code or high-level abstract logic onto specialized hardware architectures.

E. Edge Computing as a Key Driver

The explosion of IoT devices and the need for immediate processing closer to the data source will increasingly drive demand for energy-efficient, compact, and powerful specialized processors at the edge. Neuromorphic computing, in particular, could find a strong early foothold in this domain, enabling autonomous devices to make real-time decisions without constant cloud connectivity.

Conclusion

The silicon chip, a marvel of human ingenuity, has served as the bedrock of the digital age for over half a century. Its journey, guided by the relentless pace of Moore’s Law, has brought us to an era of unprecedented computational power. However, as we stand at the precipice of its fundamental physical and economic limits, the quest for future computing beyond silicon chips is not merely an academic exercise but a strategic imperative.

This journey is leading us into fascinating new frontiers: the counterintuitive principles of quantum mechanics, the intricate, energy-efficient architecture of the human brain, the bandwidth of light, and the untapped potential of novel materials. While each of these paradigms presents its own unique and formidable challenges in terms of engineering, software development, and massive investment, they collectively promise to unlock computational capabilities that could solve problems currently considered intractable, revolutionize industries from medicine to AI, and redefine our relationship with technology.

The future of computing will likely be a diverse and hybrid landscape, where specialized processors augment traditional systems, and where innovation is driven by interdisciplinary collaboration and a relentless pursuit of efficiency and intelligence. The transition will be gradual, but the destination is clear: a world powered by computations that transcend the limitations of the past, truly moving beyond silicon chips to engineer the very fabric of tomorrow’s digital existence.