The relentless pursuit of more powerful and efficient computing has led humanity from vacuum tubes to transistors, and from microprocessors to parallel architectures. Yet, even our most advanced conventional computers, built on the von Neumann architecture, struggle with the energy demands and inherent limitations of processing massive, unstructured data—precisely the kind of data our brains handle effortlessly. This fundamental challenge is giving rise to neuromorphic chips, a revolutionary class of hardware designed to mimic the brain’s structure and function. By moving beyond traditional computing’s separate processing and memory units, these brain-inspired chips promise to unlock unprecedented capabilities in AI, sensor data processing, and ultra-low-power edge computing, truly redefining the future of computation.

The Bottleneck: Why Conventional Computing Struggles with AI

To understand the profound promise of neuromorphic computing, it’s crucial to first grasp the limitations of our current computational paradigm, particularly when faced with the demands of artificial intelligence.

A. The Von Neumann Bottleneck: A Fundamental Architectural Limit

Virtually all modern computers are built on the von Neumann architecture, described by John von Neumann in 1945. While incredibly successful, it has a fundamental weakness for certain types of computation, especially those involving large datasets and complex pattern recognition.

- Separate Memory and Processor: In a von Neumann architecture, the central processing unit (CPU) and memory (RAM) are distinct components. Data must constantly move back and forth between them to be processed.

- The Bottleneck Itself: This constant movement of data creates a “bottleneck” at the data bus. The CPU often spends more time waiting for data to arrive from memory than it does actually processing it. This significantly limits computational speed and efficiency, particularly for data-intensive tasks.

- High Energy Consumption: Moving data is energy-intensive. As AI models grow larger and require processing vast amounts of data, the energy consumed by data transfer between CPU and memory becomes a dominant factor in power consumption, making such systems unsuitable for power-constrained devices.

- Sequential Processing: Von Neumann machines are fundamentally sequential processors. While they can achieve parallelism, their core operation involves fetching instructions and data one after another, which is inefficient for highly parallel, distributed computations like those performed by neural networks.
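
To make the bottleneck concrete, a rough two-term estimate can compare the time a workload spends computing with the time it spends moving data. This is only a sketch: the function name `bottleneck_estimate`, the workload size, and the machine figures (1 TFLOP/s, 50 GB/s) are illustrative assumptions, not measurements of any real system.

```python
def bottleneck_estimate(flops, bytes_moved, peak_flops_per_s, bandwidth_bytes_per_s):
    """Return (compute_time, memory_time, bound) in seconds for a simple two-term model."""
    compute_time = flops / peak_flops_per_s
    memory_time = bytes_moved / bandwidth_bytes_per_s
    bound = "memory" if memory_time > compute_time else "compute"
    return compute_time, memory_time, bound


# Hypothetical workload: one 4096 x 4096 matrix-vector product in 32-bit floats.
rows, cols = 4096, 4096
flops = 2 * rows * cols        # one multiply and one add per weight
bytes_moved = 4 * rows * cols  # each 4-byte weight is fetched from memory once

# Assumed machine: 1 TFLOP/s peak compute and 50 GB/s memory bandwidth.
compute_t, memory_t, bound = bottleneck_estimate(flops, bytes_moved, 1e12, 50e9)
print(f"compute {compute_t * 1e6:.1f} us, memory {memory_t * 1e6:.1f} us -> {bound}-bound")
```

Under these assumed numbers the memory transfer takes about 40 times longer than the arithmetic, so the processor would spend most of its time waiting for data.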

B. The Demands of Modern Artificial Intelligence (AI)

The recent explosion in AI capabilities, particularly deep learning, has pushed conventional computing to its limits, exacerbating the von Neumann bottleneck.

- Massive Datasets: Training cutting-edge AI models (e.g., large language models, sophisticated image recognition) requires processing datasets that comprise terabytes or even petabytes of information. This necessitates immense data movement.

- Parallel Operations: Neural networks, the backbone of modern AI, are inherently parallel. They consist of millions or billions of interconnected “neurons” that perform simple operations simultaneously. Conventional CPUs, despite their power, are not natively optimized for this highly parallel, distributed workload.

- Inference at the Edge: As AI moves from data centers to edge devices (e.g., smartphones, IoT sensors, autonomous vehicles), the need for low-power, real-time AI inference becomes critical. Conventional chips struggle to deliver AI performance in such power-constrained environments due to their high energy demands.

- Learning and Adaptation: Traditional computers excel at executing precise, pre-programmed instructions. They are not naturally designed for continuous learning and adaptation, which is fundamental to biological intelligence and many advanced AI applications.

These challenges highlight the urgent need for a new computational architecture—one that can process data efficiently, handle parallel operations natively, and operate with vastly reduced power consumption, precisely what neuromorphic chips aim to achieve.

Core Concepts of Neuromorphic Computing: Inspired by the Brain

Neuromorphic computing fundamentally breaks away from the von Neumann architecture by drawing direct inspiration from the biological brain’s structure and operational principles. It’s about building computers that think more like brains.

A. In-Memory Computing (Processing-in-Memory)

The most radical departure from von Neumann is the integration of processing directly into or very close to the memory units.

- Co-located Processing and Memory: Instead of separate CPU and RAM, neuromorphic chips interleave computation directly with storage elements. This dramatically reduces the distance data needs to travel, effectively bypassing the von Neumann bottleneck.

- Reduced Data Movement: By processing data where it resides, the energy consumed by moving data between processor and memory is drastically cut down, leading to far greater power efficiency.

- Parallel and Distributed Processing: Each small block of memory sits next to its own local processing element (a “neuron” together with its synapses), allowing for highly parallel and distributed computation, mirroring how neurons operate in the brain.

B. Spiking Neural Networks (SNNs)

Unlike traditional Artificial Neural Networks (ANNs) used in deep learning, which typically process data in synchronous batches, neuromorphic chips are designed to implement Spiking Neural Networks (SNNs).

- Event-Driven Communication (Spikes): In SNNs, neurons only “fire” (or “spike”) when a certain threshold of electrical activity is reached. Information is encoded in the timing and frequency of these spikes, similar to how biological neurons communicate. This is in contrast to ANNs where information is transmitted as continuous values.

- Sparsity and Energy Efficiency: Because neurons only activate and communicate when there’s an “event” (a spike), the network can be highly sparse in its activity. Most neurons are often silent. This “event-driven” or “sparse” activity translates directly into incredibly low power consumption, as energy is only expended when computation is actually happening.

- Temporal Dynamics: SNNs inherently handle time-varying information, making them well-suited for processing real-time sensor data, audio, and video where temporal relationships are crucial.
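
One simple way to see how a value can be carried by spike frequency is rate coding. The sketch below is a deterministic toy encoder, not the scheme used by any particular chip; the function name `rate_encode` and the choice of a unit threshold are assumptions made for illustration.

```python
def rate_encode(intensity, n_steps):
    """Encode a value in [0, 1] as a spike train whose firing rate tracks the value."""
    spikes = []
    accumulator = 0.0
    for _ in range(n_steps):
        accumulator += intensity
        if accumulator >= 1.0:
            spikes.append(1)       # emit a spike and carry over the remainder
            accumulator -= 1.0
        else:
            spikes.append(0)       # stay silent on this time step
    return spikes


print(rate_encode(0.5, 8))   # [0, 1, 0, 1, 0, 1, 0, 1]
print(rate_encode(0.25, 8))  # [0, 0, 0, 1, 0, 0, 0, 1]
```

A stronger input produces more spikes in the same time window, and a zero input produces none at all, which is where the sparsity comes from.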

C. Synapses and Neurons as Basic Building Blocks

Neuromorphic chips are designed with fundamental computational units that mimic biological neurons and synapses.

- Artificial Neurons: These are the processing units. Each artificial neuron accumulates inputs from other neurons, and if the sum exceeds a threshold, it generates an output “spike.”

- Artificial Synapses: These are the memory elements, representing the connections between neurons. Each synapse stores a weight (a numerical value) that modulates the strength of the signal passed between neurons. Crucially, in many research designs these synapses are implemented with memristors or other emerging non-volatile memory technologies.

- Learning Through Synaptic Plasticity: Just like in biological brains, the “learning” process in neuromorphic chips involves modifying the strength of these synaptic connections. This “synaptic plasticity” means the memory itself is adaptive and learns, rather than being static storage. This is a form of in-memory learning.
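
The accumulate-and-fire behavior described above is commonly modeled as a leaky integrate-and-fire (LIF) neuron. The following is a minimal sketch of that model, not the circuit of any specific chip; the class name `LIFNeuron` and the `threshold` and `leak` values are assumptions chosen for illustration.

```python
class LIFNeuron:
    """Toy leaky integrate-and-fire neuron with one weight per input synapse."""

    def __init__(self, weights, threshold=1.0, leak=0.9):
        self.weights = weights      # synaptic weights, one per input
        self.threshold = threshold  # firing threshold (assumed value)
        self.leak = leak            # fraction of potential kept each step (assumed value)
        self.potential = 0.0

    def step(self, input_spikes):
        """Advance one time step; return 1 if the neuron fires, else 0."""
        self.potential *= self.leak
        # Only synapses that received a spike contribute their weight.
        self.potential += sum(w for w, s in zip(self.weights, input_spikes) if s)
        if self.potential >= self.threshold:
            self.potential = 0.0    # reset after firing
            return 1
        return 0


neuron = LIFNeuron(weights=[0.6, 0.6])
outputs = [neuron.step(s) for s in ([1, 0], [0, 1], [0, 0])]
print(outputs)  # [0, 1, 0]
```

A single input spike is not enough to cross the threshold here; the neuron fires only when a second spike arrives before the first has leaked away.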

D. Analog and Mixed-Signal Computation

While conventional computers are almost exclusively digital (processing 0s and 1s), many neuromorphic designs incorporate analog or mixed-signal (analog-digital hybrid) computation.

- Energy Efficiency of Analog: Analog computation can be significantly more energy-efficient for certain types of operations (e.g., the multiplication and addition inherent in neural networks) because the physics of the circuit does the arithmetic: a current through a weighted element is a multiplication (Ohm’s law), and currents meeting on a shared wire add up (Kirchhoff’s current law), with no clocked digital logic switching for every operation.

- Approximation and Robustness: Analog circuits work with continuous values and are subject to noise and device variation, but neural-network workloads tend to tolerate such imprecision, much as biological systems do. This often means less precision but greater efficiency for tasks like pattern recognition.

- Beyond Binary: Neuromorphic systems often don’t rely on strict binary logic. Information can be represented by various properties of the spikes (timing, frequency), offering a richer information encoding.
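
The analog multiply-and-add can be illustrated with an idealized resistive crossbar, where each synapse is a conductance, inputs are row voltages, and each column wire collects the resulting currents. The sketch below simulates that arithmetic digitally under ideal assumptions (no noise, no wire resistance); the function name `crossbar_currents` and the example values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an ideal crossbar.

    conductances[i][j] is the device at row i, column j (siemens);
    voltages[i] is the voltage applied to row i (volts).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            # Ohm's law gives each device current (g * v);
            # Kirchhoff's current law sums them along the column wire.
            currents[j] += g * v
    return currents


# Hypothetical 2 x 2 crossbar with microsiemens-scale devices.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.5, 0.25]
I = crossbar_currents(G, V)
print(I)  # approximately [1.25e-06, 2e-06] amperes
```

In real hardware the loop does not exist: all of the products and sums happen at once in the wires, which is why the operation can be so cheap.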

These core concepts collectively enable neuromorphic chips to process information with unparalleled power efficiency and parallel capabilities, offering a fundamentally different approach to computation.

Leading Neuromorphic Architectures and Projects

The development of neuromorphic chips is a burgeoning field, with several major players and research initiatives pushing the boundaries of brain-inspired computing.

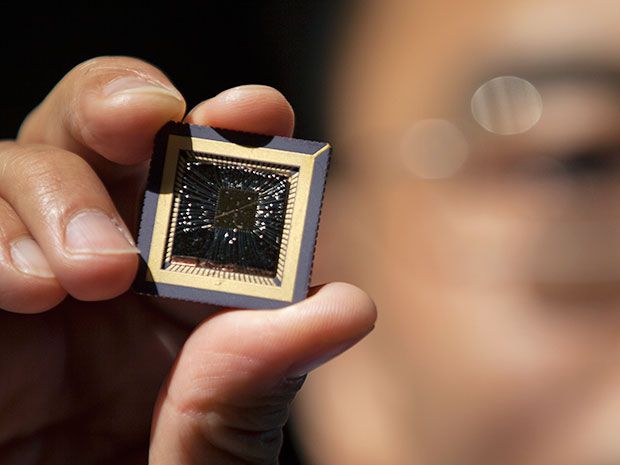

A. IBM TrueNorth

One of the pioneering large-scale neuromorphic chips, IBM TrueNorth, was developed as part of DARPA’s SyNAPSE program.

- Architecture: It features 4,096 cores, each containing 256 “neurons” and a 256 × 256 crossbar of 65,536 “synapses,” totaling about 1 million neurons and 256 million synapses on a single chip. It is designed for low power consumption.

- Spiking Neural Network (SNN): TrueNorth is designed to run SNNs, where neurons communicate via asynchronous spikes.

- Applications: Primarily targeted for real-time sensor data processing, pattern recognition, and image/video analysis, particularly at the edge. It excels in tasks that map well to sparse, event-driven computation.

- Strengths: Extremely low power consumption (on the order of tens of milliwatts in operation), suitable for always-on devices.
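
The chip-level totals follow from the per-core figures, given that each core connects its 256 inputs to its 256 neurons through a 256 × 256 synaptic crossbar. A quick check of the arithmetic (variable names are illustrative):

```python
cores = 4096
neurons_per_core = 256
synapses_per_core = 256 * 256   # one crossbar junction per input/neuron pair

total_neurons = cores * neurons_per_core
total_synapses = cores * synapses_per_core

print(total_neurons)   # 1048576, about 1 million
print(total_synapses)  # 268435456, i.e. 256 x 2**20, about 256 million
```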

B. Intel Loihi (and Loihi 2)

Intel Loihi, introduced by Intel Labs in 2017, is another prominent neuromorphic research chip; its successor, Loihi 2, was released in 2021.

- Architecture: Loihi features 128 “neuromorphic cores,” each with 1,024 artificial neurons, for a total of 131,072 neurons and 130 million synapses per chip. Loihi 2 raises density and performance further, supporting up to 1 million neurons per chip.

- Programmability: Designed to be highly programmable, allowing researchers to experiment with various SNN models and learning rules.

- Learning Capabilities: Supports different learning mechanisms, including unsupervised learning, reinforcement learning, and spike-timing-dependent plasticity (STDP), which mimics how synapses strengthen or weaken based on the timing of pre- and post-synaptic neuron firing.

- Applications: Intel envisions Loihi being used for constraint satisfaction problems, real-time control, pattern matching, gesture recognition, and other AI tasks where power efficiency and real-time adaptation are key.

C. BrainChip Akida

BrainChip Akida is a commercial neuromorphic processor designed for edge AI applications.

- Architecture: It is a neural network processor (NNP) that leverages SNN principles for efficient event-domain AI. It’s designed for low power consumption and on-chip learning.

- Edge Focus: Specifically targets embedded systems and edge devices where power and size constraints are critical.

- On-Chip Learning: A key feature is its ability to perform incremental, on-chip learning, allowing models to adapt to new data without needing to be retrained in the cloud.

- Applications: Used for always-on AI applications like voice recognition, gesture control, anomaly detection, and sensory processing in IoT devices.

D. European Human Brain Project (HBP) / SpiNNaker and BrainScaleS

European research efforts, particularly within the Human Brain Project (HBP), have produced significant neuromorphic hardware.

- SpiNNaker (Spiking Neural Network Architecture): Developed at the University of Manchester, SpiNNaker is a massively parallel computing platform specifically designed to simulate large-scale SNNs in real time. It uses ARM processors connected by a custom packet-switched network, allowing for massive numbers of virtual neurons.

- BrainScaleS (Brain-inspired computing system, Analog Neuromorphic Hardware): Developed at Heidelberg University, BrainScaleS uses physical models of neurons and synapses implemented in analog circuits on wafers. It operates much faster than real time, allowing for rapid exploration of neural dynamics.

- Purpose: Both are primarily research platforms aimed at understanding the brain and developing new algorithms and hardware for brain-inspired computing.

These projects highlight the diverse approaches and growing momentum in the field of neuromorphic computing, each contributing to a future where AI processing becomes vastly more efficient and pervasive.

Transformative Advantages of Neuromorphic Chips

The unique architecture of neuromorphic chips offers several compelling advantages that address the fundamental limitations of conventional computing, particularly for AI workloads.

A. Unprecedented Power Efficiency

This is arguably the most significant advantage. Neuromorphic chips consume dramatically less power than traditional CPUs or GPUs, especially when processing sparse or event-driven data.

- In-Memory Processing: By reducing the need to constantly shuttle data between separate memory and processing units, the energy overhead associated with data movement—a major power drain in von Neumann architectures—is drastically minimized.

- Event-Driven (Sparse) Activity: In SNNs, neurons only consume significant power when they “spike” (i.e., when there’s an event). In contrast, conventional clocked processors keep switching on every cycle while active and process every input in full, whether or not it has changed. This sparse, activity-dependent power consumption is highly efficient for many real-world sensor data streams (e.g., camera feeds where only a small portion of the image changes).

- Analog Computation: Where implemented, analog circuits can perform certain neural network operations with inherent energy efficiency compared to their digital counterparts, requiring fewer transistors and less precise voltage control.

This ultra-low power consumption makes neuromorphic chips ideal for edge devices, battery-powered systems, and always-on AI applications where energy budgets are extremely tight.
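
The saving from sparse activity can be sketched by counting operations. The toy comparison below computes the same layer output two ways: a dense pass that touches every weight, and an event-driven pass that only touches the weights of inputs that spiked. It counts operations, not joules, and the function names, layer size, and spike pattern are assumptions for illustration.

```python
def dense_update(weights, inputs):
    """Visit every weight, as a dense matrix-vector product would."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            out[j] += w * x
            ops += 1
    return out, ops


def event_driven_update(weights, spike_indices):
    """Visit only the weight rows belonging to inputs that actually spiked."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i in spike_indices:
        for j, w in enumerate(weights[i]):
            out[j] += w            # a spike is a 1, so no multiplication is needed
            ops += 1
    return out, ops


# Hypothetical layer: 8 inputs, 4 outputs, only inputs 2 and 5 spike.
weights = [[float((i + j) % 3) for j in range(4)] for i in range(8)]
spike_indices = [2, 5]
inputs = [1.0 if i in spike_indices else 0.0 for i in range(8)]

dense_out, dense_ops = dense_update(weights, inputs)
event_out, event_ops = event_driven_update(weights, spike_indices)
print(dense_out == event_out, dense_ops, event_ops)  # True 32 8
```

Both passes give the same result, but the event-driven one does a quarter of the work here because only a quarter of the inputs were active. How much of that translates into energy savings depends on the hardware.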

B. High Parallelism and Scalability for AI Workloads

Neuromorphic architectures are inherently parallel, mimicking the brain’s distributed processing capabilities.

- Massive Parallelism: Millions or billions of artificial neurons and synapses can perform simple computations simultaneously and independently. This makes them naturally optimized for the parallel computations inherent in neural networks, especially SNNs.

- Direct Mapping of Neural Networks: The architecture directly maps to the structure of neural networks, leading to more efficient execution of AI algorithms compared to mapping them onto conventional, general-purpose processors.

- Scalability: The modular nature of some neuromorphic designs allows for scaling by adding more chips or cores, akin to how biological brains grow in complexity.

C. Enhanced Learning and Adaptability Capabilities

Unlike many conventional AI accelerators, which are primarily optimized for inference (applying a pre-trained model), many neuromorphic chips are designed with on-chip learning capabilities.

- On-Chip Learning: The ability to adapt and learn from new data directly on the device, without requiring offloading to a cloud server for retraining. This is crucial for real-time adaptation in dynamic environments.

- Unsupervised and Online Learning: Neuromorphic systems are well-suited for unsupervised learning (finding patterns in unlabeled data) and online learning (learning continuously from a stream of data), mimicking the brain’s ability to learn without explicit labels or prior knowledge.

- Spike-Timing-Dependent Plasticity (STDP): Many neuromorphic chips implement STDP, a biologically inspired learning rule where the timing of neural spikes determines how synaptic connections are strengthened or weakened. This enables efficient, localized learning.

This adaptability allows AI models to evolve and improve in real-world scenarios, making them more robust and intelligent.
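
A common textbook form of STDP is the pair-based rule with exponential timing windows: if the pre-synaptic spike arrives shortly before the post-synaptic one, the weight grows, and if it arrives after, the weight shrinks. The sketch below implements that form only; the amplitudes, time constant, weight bounds, and function names are assumed values, and real chips may use different variants.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds)."""
    dt = t_post - t_pre
    if dt > 0:    # pre fired before post: strengthen the synapse
        return a_plus * math.exp(-dt / tau)
    if dt < 0:    # pre fired after post: weaken the synapse
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight and keep it inside its allowed range."""
    return min(w_max, max(w_min, weight + stdp_delta(t_pre, t_post)))


w = 0.5
w_after_causal = apply_stdp(w, t_pre=10.0, t_post=15.0)   # pre leads post
w_after_acausal = apply_stdp(w, t_pre=15.0, t_post=10.0)  # post leads pre
print(w_after_causal > w, w_after_acausal < w)  # True True
```

The update uses only the two spike times and the current weight, which is why the rule can be computed locally at each synapse.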

D. Real-Time Processing for Sensor Data

The event-driven nature of SNNs makes neuromorphic chips exceptionally good at processing asynchronous, continuous streams of sensor data.

- Efficient Event Handling: They respond only to changes or “events” in the data, rather than constantly processing full frames or fixed batches. This is highly efficient for inputs like vision (e.g., detecting motion, specific objects), audio (e.g., voice commands), and haptic sensors.

- Low Latency: The direct processing of events and the in-memory computation lead to very low latency responses, crucial for real-time applications like autonomous driving, robotics, and industrial control.

- Sensor Integration: Designed to integrate seamlessly with various types of sensors, turning raw sensor data directly into actionable insights or control signals.
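
The idea of responding only to changes can be approximated in software by turning two successive frames into a list of events. Real event-based sensors work asynchronously per pixel, so this frame-differencing version is only a rough stand-in; the function name `frame_to_events`, the threshold, and the sample frames are assumptions.

```python
def frame_to_events(prev_frame, frame, threshold=10):
    """Return (x, y, polarity) for each pixel whose brightness changed enough."""
    events = []
    for y, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for x, (old, new) in enumerate(zip(prev_row, row)):
            diff = new - old
            if abs(diff) >= threshold:
                events.append((x, y, 1 if diff > 0 else -1))
    return events


# Hypothetical 3 x 2 grayscale frames: one pixel brightens, one darkens.
previous = [[10, 10, 10],
            [10, 10, 10]]
current = [[10, 50, 10],
           [10, 10, 0]]
events = frame_to_events(previous, current)
print(events)  # [(1, 0, 1), (2, 1, -1)]
```

Six pixels go in and two events come out; a static scene would produce no events and therefore no downstream work.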

E. Robustness and Fault Tolerance

Inspired by the brain’s resilience, neuromorphic systems can exhibit a degree of robustness.

- Distributed Nature: The distributed and parallel nature means that the failure of a few “neurons” or “synapses” does not necessarily lead to catastrophic failure of the entire system. Other parts can compensate, similar to how the brain can still function with localized damage.

- Approximation and Tolerance to Noise: Many neuromorphic designs, especially those using analog components, can inherently tolerate some level of noise or imprecision in their operations, mimicking the robustness of biological systems which are not perfectly precise.

These advantages collectively position neuromorphic chips as a game-changer for AI and edge computing, promising to unlock capabilities that are currently unfeasible with conventional hardware.

Challenges and Future Considerations in Neuromorphic Computing

Despite their immense promise, neuromorphic chips face significant challenges that must be overcome for widespread commercial adoption.

A. Programming Model and Algorithm Development

One of the biggest hurdles is the lack of a mature and standardized programming model for neuromorphic chips.

- Different Paradigm: Programming SNNs and neuromorphic hardware is fundamentally different from traditional von Neumann programming. Existing software development tools and expertise are not directly transferable.

- Algorithm Optimization: Many well-established deep learning algorithms (e.g., CNNs, RNNs) are optimized for conventional hardware. Adapting or re-inventing these algorithms to efficiently run on SNNs and neuromorphic architectures is an active area of research.

- Lack of Standardized Frameworks: Unlike the rich ecosystem of TensorFlow or PyTorch for conventional AI, neuromorphic computing lacks widely adopted, standardized software frameworks, making development challenging for researchers and developers.

B. Hardware Manufacturing and Scalability

Manufacturing neuromorphic chips at scale presents its own set of challenges.

- Emerging Technologies: Many neuromorphic designs rely on emerging memory technologies (e.g., memristors, phase-change memory) for synapses, which are still under active research and development, and whose manufacturing processes are not as mature or cost-effective as traditional CMOS.

- Integration Complexity: Integrating billions of neurons and synapses onto a single chip, with complex interconnections and often analog components, poses significant design and manufacturing complexity.

- Yield and Cost: Achieving high manufacturing yields for these novel architectures, especially those with analog components, can be challenging, leading to higher per-chip costs.

C. Benchmarking and Performance Evaluation

Comparing the performance of neuromorphic chips with conventional GPUs or CPUs is often like comparing apples and oranges, making benchmarking difficult.

- Different Metrics: Traditional benchmarks focus on FLOPS (floating-point operations per second) or TOPS (tera operations per second). Neuromorphic chips excel in energy efficiency per ‘spike’ or ‘synaptic operation’, which isn’t directly comparable.

- Specific Use Cases: Neuromorphic chips shine in specific use cases (e.g., sparse, event-driven data). They are not general-purpose CPUs and shouldn’t be benchmarked as such. Developing relevant benchmarks that highlight their unique strengths is crucial.
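
One option for comparing very different architectures is a task-level metric such as energy per inference, which can be estimated as average power multiplied by latency. The sketch below shows only the arithmetic; the device names and all figures are hypothetical placeholders, not measured results.

```python
def energy_per_inference_joules(average_power_watts, latency_seconds):
    """Energy for one inference, assuming constant average power over its latency."""
    return average_power_watts * latency_seconds


# Hypothetical devices; the numbers are placeholders, not measurements.
devices = {
    "neuromorphic_chip": {"power_w": 0.05, "latency_s": 0.020},
    "gpu": {"power_w": 10.0, "latency_s": 0.002},
}

results = {
    name: energy_per_inference_joules(d["power_w"], d["latency_s"])
    for name, d in devices.items()
}
for name, joules in results.items():
    print(f"{name}: {joules * 1e3:.2f} mJ per inference")
```

With these placeholder figures the GPU finishes ten times sooner but uses twenty times more energy per inference, which illustrates why a single throughput number cannot rank the two.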

D. Interfacing with Conventional Systems

Neuromorphic chips are specialized accelerators. They will likely not replace conventional CPUs entirely but will augment them. Efficiently interfacing neuromorphic chips with existing conventional computing systems is a challenge. This involves designing high-bandwidth, low-latency interfaces and ensuring seamless data flow between the two very different architectural paradigms.

E. Biologically Inspired vs. Biologically Constrained

The field must navigate the tension between being biologically inspired (taking ideas from the brain) and being biologically constrained (trying to perfectly replicate the brain’s exact mechanisms). Overly strict adherence to biological details might hinder engineering solutions that are more practical or performant in silicon. Finding the right balance is key.

F. Ethical Implications and Societal Impact

As AI powered by neuromorphic chips becomes more powerful and autonomous, the ethical implications and societal impact will become increasingly prominent. Questions regarding autonomous decision-making, bias in AI models, data privacy, accountability for AI actions, and the future of human-computer interaction will need careful consideration and societal dialogue.

Key Applications and Use Cases for Neuromorphic Chips

The unique capabilities of neuromorphic chips make them ideally suited for a range of demanding applications where conventional computing struggles due to power, latency, or data volume constraints.

A. Edge AI and IoT Devices

This is arguably the most immediate and impactful application area. Neuromorphic chips can bring powerful AI capabilities directly to resource-constrained devices.

- Always-On Sensory Processing: Enabling devices to continuously listen for voice commands, monitor environments for anomalies, or perform gesture recognition with minimal battery drain.

- Smart Sensors: Integrating AI directly into sensors for pre-processing data and extracting relevant features, reducing the need to send raw, large datasets to the cloud (e.g., smart cameras that only send data when specific activity is detected).

- Battery-Powered Devices: Extending the battery life of wearables, drones, remote sensors, and portable medical devices by providing highly efficient on-device AI inference.

B. Autonomous Vehicles and Robotics

The real-time, low-power processing capabilities are crucial for autonomous systems.

- Real-time Sensor Fusion: Efficiently processing and fusing data from multiple sensors (LiDAR, radar, cameras) in real time to build an accurate perception of the environment for navigation and obstacle avoidance.

- Robotic Control: Enabling robots to react instantly to changes in their environment, learn new motor skills on the fly, and interact more naturally with their surroundings.

- Adaptive AI for Dynamic Environments: Allowing autonomous systems to learn and adapt to unpredictable real-world conditions without constant connectivity to a powerful cloud backend.

C. Data Center Efficiency and High-Performance Computing (HPC)

While primarily known for edge applications, neuromorphic chips can also contribute to more efficient data centers and HPC.

- Specialized AI Accelerators: Offloading specific, power-hungry AI workloads (e.g., recommendation engines, real-time analytics on sparse data) to neuromorphic chips can drastically reduce overall data center energy consumption.

- Neuromorphic Supercomputers: Creating large clusters of neuromorphic chips for simulating massive neural networks or exploring brain-like computations for scientific research.

- Cognitive Workloads: Running computationally intensive AI models for tasks like natural language processing, advanced pattern recognition across vast datasets, or complex simulation.

D. Advanced Human-Computer Interfaces (HCI)

Neuromorphic chips can enable more intuitive and natural ways for humans to interact with computers.

- Brain-Computer Interfaces (BCI): Processing neural signals in real time for direct control of prosthetics, cursors, or communication systems, potentially for individuals with severe disabilities.

- Gesture and Emotion Recognition: Enabling devices to understand complex human gestures, facial expressions, and even subtle emotional cues with greater accuracy and less latency.

- Adaptive Personal Assistants: Powering AI assistants that learn continuously from user interactions and adapt their responses and functionalities in real time, becoming truly personalized.

E. Novel Scientific Research and Brain Understanding

Neuromorphic hardware is a powerful tool for neuroscience and cognitive science research.

- Simulating Biological Brains: Providing platforms to build and run large-scale simulations of neural circuits and entire brain regions, helping neuroscientists understand how the brain processes information, learns, and generates consciousness.

- Developing New AI Algorithms: The unique architecture encourages the development of novel SNN-based AI algorithms that could lead to new breakthroughs beyond what’s possible with current deep learning paradigms.

- Drug Discovery: Simulating neural activity to understand neurological diseases and test the effects of new drugs at a cellular level.

These applications collectively paint a picture of a future where computation is not just faster, but also inherently more intelligent, adaptive, and sustainable.

The Future Trajectory of Brain-Inspired Computing

The field of neuromorphic computing is at a pivotal juncture, moving from fundamental research to more practical commercial applications. Several key trends will define its future.

A. Convergence with Standard AI Frameworks

A major future trend will be the creation of more user-friendly software stacks and the convergence of neuromorphic programming with existing AI frameworks. This means allowing developers to leverage tools like TensorFlow or PyTorch to design SNNs that can then be compiled and run efficiently on neuromorphic hardware, vastly increasing accessibility and accelerating adoption. Abstraction layers will hide the underlying hardware complexity.

B. Hybrid Architectures: Best of Both Worlds

It’s unlikely that neuromorphic chips will completely replace conventional CPUs and GPUs. Instead, the future will likely see the rise of hybrid architectures where neuromorphic processors act as specialized accelerators alongside traditional von Neumann systems. Conventional CPUs will handle general-purpose tasks, while neuromorphic chips will excel at specific, highly parallel, and energy-efficient AI workloads. This ‘heterogeneous computing’ approach will maximize efficiency for diverse tasks.

C. Advanced Materials and Manufacturing Techniques

The continued development of novel materials and advanced manufacturing techniques will be critical.

- Memristor Maturation: As memristors and other non-volatile memory technologies mature, they will become more reliable, scalable, and cost-effective, directly enabling denser and more efficient artificial synapses.

- 3D Integration: Techniques like 3D stacking of chips will allow for even tighter integration of processing and memory, further reducing data movement bottlenecks and increasing neural network density.

- Beyond Silicon: Research into post-silicon materials and computing paradigms (e.g., optical computing, spintronics) could open entirely new avenues for brain-inspired architectures.

D. Edge-Cloud Continuum and Distributed AI

Neuromorphic chips will be a key enabler for the edge-cloud continuum. They will facilitate powerful AI processing directly at the edge, reducing reliance on constant cloud connectivity and minimizing data transfer costs and latency. The cloud will still be used for large-scale training, while neuromorphic chips handle efficient, real-time inference and adaptation on distributed edge devices. This forms a truly distributed AI landscape.

E. Greater Biological Fidelity and Real-world Learning

Future neuromorphic chips will achieve even higher levels of biological fidelity, enabling more sophisticated brain simulations and more powerful, brain-like AI. This will lead to AI systems that can learn more efficiently from real-world, unlabeled, and noisy data, adapting continuously to novel situations in ways that current systems struggle with. This could unlock advancements in truly autonomous decision-making and general AI.

F. Specialized Chipsets for Specific AI Tasks

Rather than general-purpose neuromorphic chips, we might see the development of highly specialized neuromorphic chipsets tailored for very specific AI tasks or sensory modalities (e.g., chips optimized purely for vision, or for audio processing, or for robotics control). This specialization could push efficiency and performance boundaries even further for niche applications.

Conclusion

Neuromorphic chips represent a profound leap forward in the quest for more efficient, powerful, and brain-like computation. By fundamentally rethinking traditional computer architecture and drawing deep inspiration from the human brain, these innovative chips are poised to overcome the limitations of the von Neumann bottleneck, particularly for the demanding, data-intensive workloads of modern Artificial Intelligence. Their unparalleled power efficiency, native parallelism, and inherent learning capabilities make them ideal candidates for transforming edge AI, autonomous systems, and advanced human-computer interfaces.

While significant challenges remain in programming models, manufacturing, and integration with existing systems, the rapid advancements in this field are undeniable. The future of computing is likely to be a hybrid landscape, where neuromorphic chips augment conventional processors, enabling a new generation of intelligent, adaptive, and sustainable technologies. Ultimately, brain-inspired computing is not just about building better computers; it’s about pushing the boundaries of what’s computationally possible, paving the way for truly intelligent machines that learn, perceive, and interact with the world in ways previously confined to science fiction.